With ever more complex software and hardware in our embedded systems, it may seem that worst-case execution time analysis is something that would be left until the very end of the software lifecycle. However, finding out about temporal problems at such a late stage is usually problematic, not only because of how much commitment has been made to a particular design, but also because the changes needed to get software to meet an execution time requirement are often much bigger than the equivalent changes to meet a functional requirement.

Ideally, you should aim for much earlier investigation of the execution time behaviour. For example:

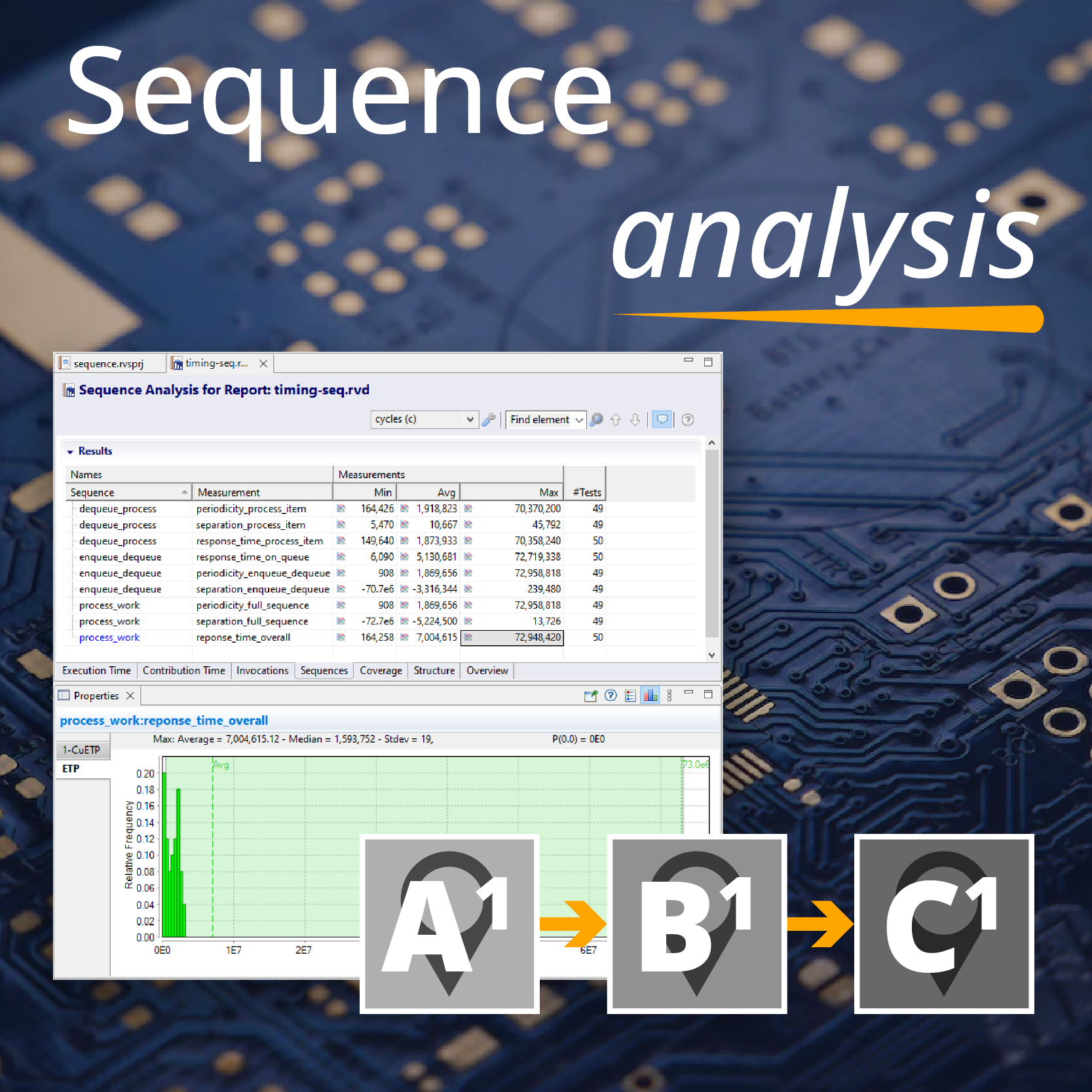

- with host-based execution, you can look at relative execution times of different parts of the software, how execution time scales in practice with the input data, and whether the same code called from different locations has vastly different execution times;

- with a modest simulator, even with only part of the software, you can look at the impact of the RTOS and application on one another. Are scheduling priorities being observed? Are tasks being released when expected? Could tasks be spread out to better even the worst-case load? The timing information also gives a good vehicle for talking about the software execution to systems engineers;

- with development hardware, you can look at the detailed schedulability, including the impact of hardware facilities on the software behaviour. Cache has a big impact, as do access latencies for different hardware devices, but different platforms all seem to have unexpected behaviours that show up clearly in the timing data.

These are just a few cases; in practice there is a continuum of different options for execution time analysis deployment, and the earlier it is introduced, the quicker you can start to control your timing uncertainty.

SAIF Autonomy to use RVS to verify their groundbreaking AI platform

SAIF Autonomy to use RVS to verify their groundbreaking AI platform

Hybrid electric pioneers, Ascendance, join Rapita Systems Trailblazer Partnership Program

Hybrid electric pioneers, Ascendance, join Rapita Systems Trailblazer Partnership Program

Magline joins Rapita Trailblazer Partnership Program to support DO-178 Certification

Magline joins Rapita Trailblazer Partnership Program to support DO-178 Certification

How to certify multicore processors - what is everyone asking?

How to certify multicore processors - what is everyone asking?

Data Coupling Basics in DO-178C

Data Coupling Basics in DO-178C

Control Coupling Basics in DO-178C

Control Coupling Basics in DO-178C

Components in Data Coupling and Control Coupling

Components in Data Coupling and Control Coupling

DO-278A Guidance: Introduction to RTCA DO-278 approval

DO-278A Guidance: Introduction to RTCA DO-278 approval

ISO 26262

ISO 26262

Data Coupling & Control Coupling

Data Coupling & Control Coupling

Verifying additional code for DO-178C

Verifying additional code for DO-178C

DO-178C Multicore In-person Training (Bristol)

DO-178C Multicore In-person Training (Bristol)

XPONENTIAL 2025

XPONENTIAL 2025

Avionics and Testing Innovations 2025

Avionics and Testing Innovations 2025

DO-178C Multicore In-person Training (Fort Worth, TX)

DO-178C Multicore In-person Training (Fort Worth, TX)