Coverage vs. Correctness

Structural coverage analysis and object code verification are very different activities that demonstrate different things and for which different approaches are required.

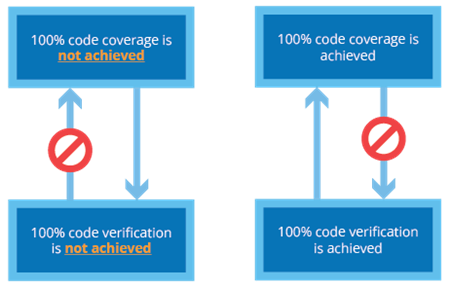

Structural coverage analysis helps provide assurance that sufficient software testing has been performed. Its aim is to demonstrate that 100% of the code has been covered during requirements-based testing. The level of coverage achieved does not demonstrate the correctness of the code – this is demonstrated by the results of the requirements-based tests that are run, and during which structural coverage is observed.

Object code verification, on the other hand, is an activity to demonstrate the correctness of additional code introduced by the compiler. The first step to being able to demonstrate this is understanding which structural elements in the object code map to which structural elements in the source code – this is known as object code to source code traceability.

Validation of COTS Ada Compiler for

Safety-Critical Applications

Compilers for the Ada programming language are already subject to a compiler conformity assessment, which shows that a given compiler correctly implements the Ada language specification. However, this assessment does not consider the suitability of the generated code for deployment to safety-related applications.

Learn how Rapita conducted a successful Ada compiler validation project.

DownloadObject code to source code traceability and object code verification

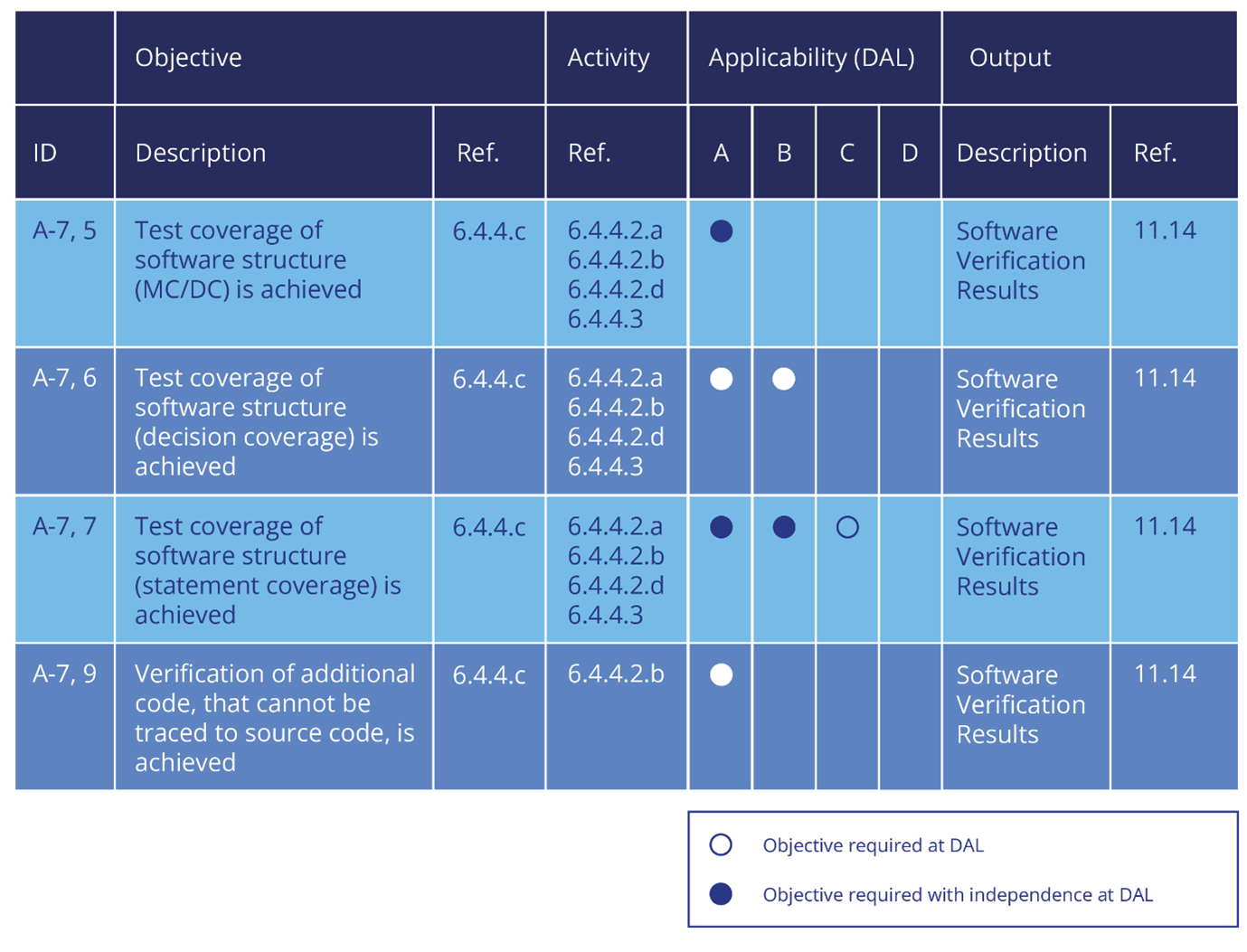

DO-248C, sometimes referred to as the DO-178C “FAQ”, highlights object code to source code traceability as a necessity for object code verification.

“When performing structural coverage at the Source Code level for Level A software, it is necessary to establish whether or not the object code is directly traceable to the Source Code.”

DO-248C 4.12.1

To establish this traceability, you’ll need to analyze all source code elements and all corresponding object code elements. DO-248C clarifies that the analysis “may not be trivial”, should consider things including “the extent of code optimization, compiler switch options, and object code inserted by the compiler”, and that the process “is intensive and should be thorough”.

Some software automation tools may be helpful in supporting traceability analysis by providing side-by-side views of the source code and the corresponding object code instructions.

DO-248C presents two potential approaches to traceability analysis, which appear different at first glance, but somewhat similar on closer inspection: compiler verification, and target object build verification. A hybrid of the two approaches could also be taken.

Compiler verification

One approach to traceability analysis suggested by DO-248C is to analyze the behavior of the compiler based on the compiler options and programming language constructs used, determine traceability based on these, and identify all potential additional code that doesn’t map to source code. That additional code can then be verified.

“One approach to this analysis is to produce code with fully representative language constructs of the application (for example, case statements, if-then-else statements, loops, etc.) and to analyze the resultant object code. Also, it is important that the individual constructs are completely representative of the constructs used in the target object code build, including complex structures (for example, nested routines).”

DO-248C 4.12.2.2

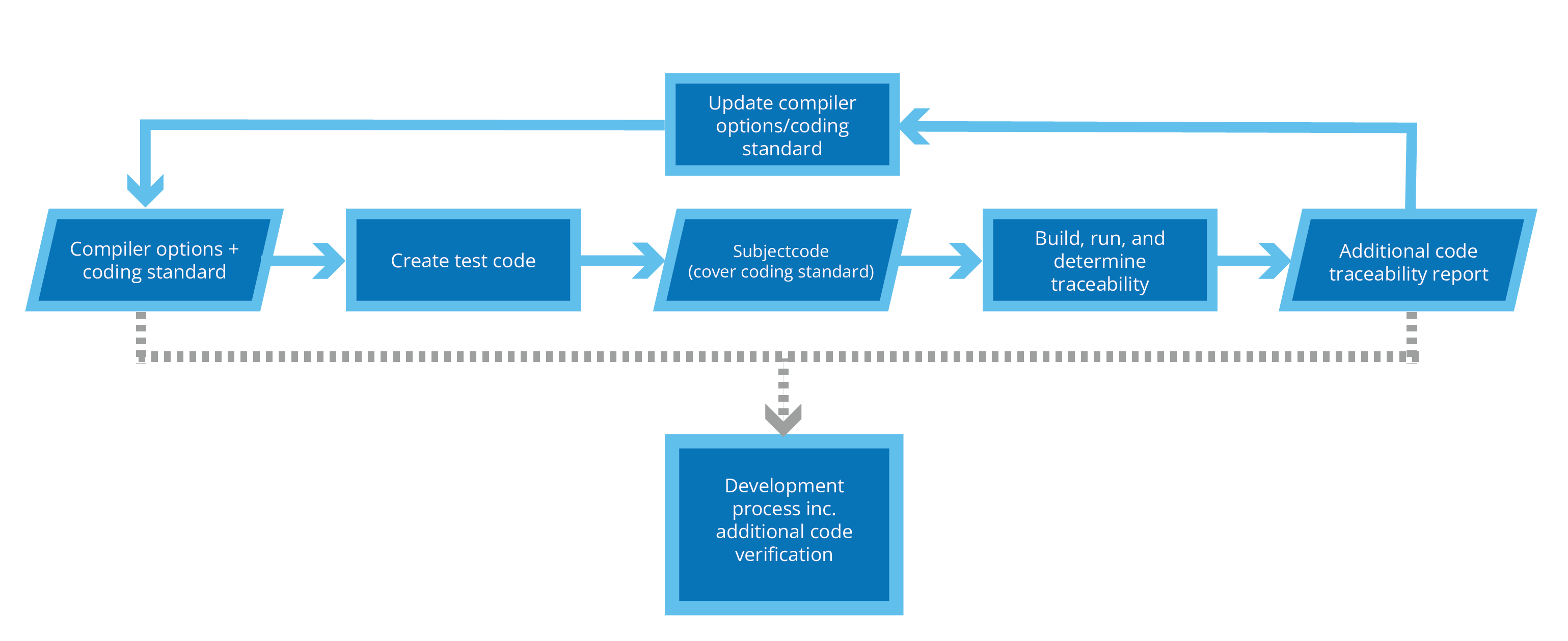

This process should involve thorough identification of the compiler options and language constructs used to develop software. The compiler verification process may elicit modification of the compiler options or coding standard to minimize traceability discrepancies and reduce the burden on verification of additional code.

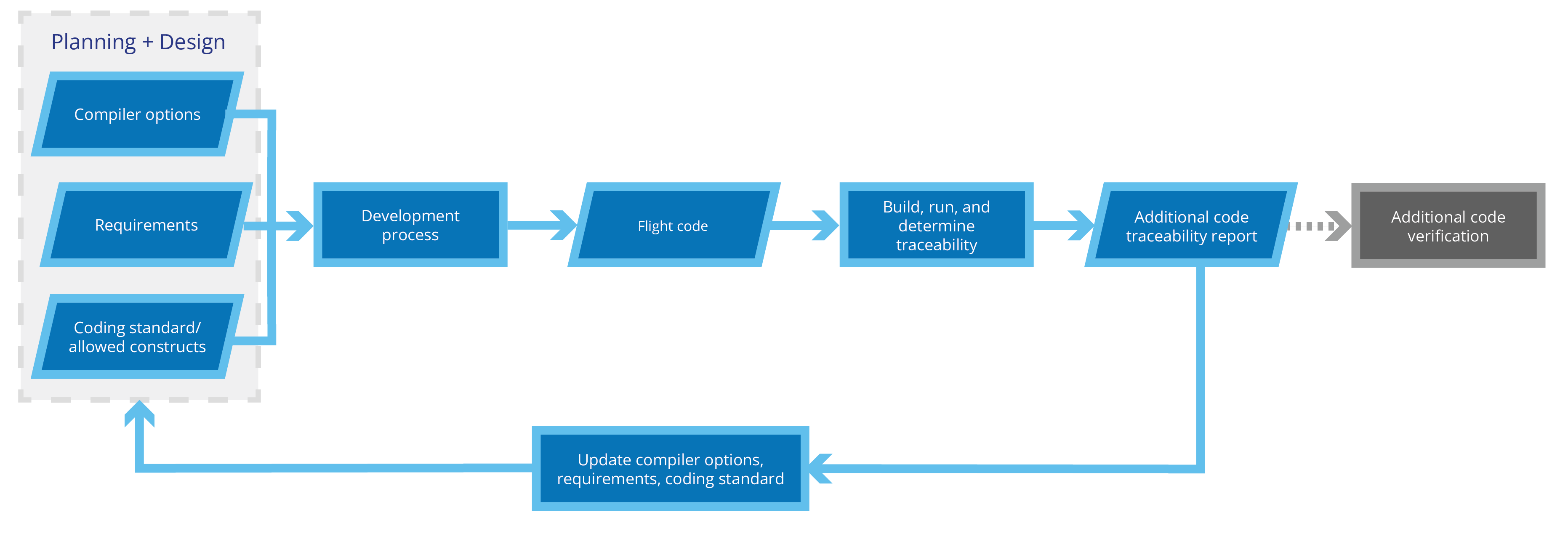

The aim of compiler verification is to identify the source code constructs that generate additional code structures. Starting with a set of compiler options and a coding standard, code constructs can be placed in the different contexts in which they can appear in a program.

After compiling such code, you can examine the resulting object code to identify what source code constructs, if any, will yield additional code constructs in the object code. You may also feed information back to adjust the coding standard and the chosen compiler options to eliminate the use of such source code constructs. Based on the additional code discovered in this way, you can create guidance on how to specify and verify this code through your normal software development life cycle.

While the process is effort-intensive, once it has been done for a specific compiler and coding standard, it doesn’t need to be repeated – you can continue to use the same compiler, compiler options, and language constructs (based on a curated coding standard) without additional verification in future projects.

Target object build verification

The second suggested approach suggested by DO-248C for traceability analysis is to “examine the target object code build” (DO-248C 4.12.2.2). Rather than verifying all potential behaviors of the compiler and code, this focuses analysis on the specific code used.

The aim here is to take the object code that results from the software development process and examine this directly to identify any additional code constructs in the object code. If such object code exists, then you go back to the software development process, update the requirements to specify the correct behavior of the additional code constructs, and then verify that the code meets those requirements. You may also update the compiler options and coding standard used to attempt to eliminate or mitigate additional code.

An advantage of this approach may be that it’s less involved than compiler verification for a single iteration of the process, but this is counterbalanced by a major disadvantage: the whole process would need to be repeated if any changes are made to the source code or compiler options.

Hybrid verification

The major cost with target object build verification is the unexpected discovery of object code introduced by the compiler late in the development life cycle, and the major cost associated with up-front compiler verification is the number of cases needed for a thorough analysis. An alternative approach here is to still rely on the target object build verification, but supplement this with up-front analysis. In the initial analysis, you could focus on verification cases that are likely to cause the introduction of additional code. This will help you choose a coding standard, compiler, and compiler settings that minimize the likelihood of ending up with unexpected additional object code that’s expensive to verify.

As the assurance case for this approach is based on target object build verification, this approach can increase flexibility. For example, you might deploy compiler verification across several projects using the same compiler. The first project would assess only the most common risk areas, while later projects would cover increasingly many cases to build up to a full compiler verification.

Verifying additional code

After selecting your compiler, compiler options, and coding standards, you may still have some additional compiler-introduced code. Examples of additional code introduced by the compiler can include things like virtual call dispatch code, compiler-inserted checks such as array index bound or type compatibility checks, and run-time library support code. No matter the type of additional object code, you’ll need to verify its correctness.

Neither DO-178C nor DO-248C provides specific guidance on how to verify the correctness of additional code introduced by the compiler.

As the purpose of object code verification is to verify the correctness of additional code, it stands to reason that the first step should be to introduce derived requirements for that additional code.

Then, you can either expand your requirements-based test suite to verify those requirements, verifying testing sufficiency using structural coverage analysis, or verify the requirements through analysis and resolve coverage gaps (features in your coverage analysis tool, such as RapiCover’s justifications mechanism may support this).

How we can help

Rapita Systems provides compiler verification services to support object code verification. Through this service, we identify compiler options and provide recommendations on modifications to compiler options and coding standards to reduce the amount of additional code and thus burden for verifying it. See our case study for more information. We can also support verification of additional code, identifying it, and verifying its correctness through requirements-based testing or analysis.

RapiCoverZero, our automated tool for object code structural coverage analysis, supports verifying additional code generated by compilers to meet the DO-178C objective. The tool automates coverage analysis of object code during requirements-based testing, and supports source to object code traceability by letting you view source code and corresponding object code side by side. Both RapiCoverZero, and RapiCover, our automated source code structural coverage analysis tool, include a justifications feature that lets you mark additional code you have verified by manual analysis as having been verified, and includes this in results exports submissible for DO-178C.

Rapita System Announces New Distribution Partnership with COONTEC

Rapita System Announces New Distribution Partnership with COONTEC

Rapita partners with Asterios Technologies to deliver solutions in multicore certification

Rapita partners with Asterios Technologies to deliver solutions in multicore certification

SAIF Autonomy to use RVS to verify their groundbreaking AI platform

SAIF Autonomy to use RVS to verify their groundbreaking AI platform

RVS gets a new timing analysis engine

RVS gets a new timing analysis engine

How to measure stack usage through stack painting with RapiTest

How to measure stack usage through stack painting with RapiTest

What does AMACC Rev B mean for multicore certification?

What does AMACC Rev B mean for multicore certification?

How emulation can reduce avionics verification costs: Sim68020

How emulation can reduce avionics verification costs: Sim68020

How to achieve multicore DO-178C certification with Rapita Systems

How to achieve multicore DO-178C certification with Rapita Systems

How to achieve DO-178C certification with Rapita Systems

How to achieve DO-178C certification with Rapita Systems

Certifying Unmanned Aircraft Systems

Certifying Unmanned Aircraft Systems

DO-278A Guidance: Introduction to RTCA DO-278 approval

DO-278A Guidance: Introduction to RTCA DO-278 approval

NXP's MultiCore for Avionics (MCFA) Conference

NXP's MultiCore for Avionics (MCFA) Conference

DO-178C Multicore In-person Training (Heathrow)

DO-178C Multicore In-person Training (Heathrow)

Avionics and Testing Innovations

Avionics and Testing Innovations

Certification Together International Conference (CTIC)

Certification Together International Conference (CTIC)