"Data coupling" and "control coupling" (collectively “DCCC”) concern the way software components interact with each other in an integrated system to perform a higher-level function. Understanding software DCCC and analyzing DCCC coverage during integration testing can help reduce development costs and mitigate risks, and DCCC analysis is required for DO-178C certification of critical avionics. Rapita Systems is developing RapiCoupling, an automation solution designed to meet the complexities of DCCC analysis for modern DO-178C software.

This is the second in a series of blogs in which we explore Data Coupling and Control Coupling (DCCC) and DCCC analysis. In this blog, we cover why, unlike many other DO-178C verification objectives, Data Coupling and Control Coupling analysis has no standard approach.

The two schools of thought in DCCC analysis

Opinions about what DCCC analysis is, and should be, vary, but there are two main schools of thought.

The first school of thought considers DCCC as a code coverage metric, preferably one that can be defined universally. This is analogous to other coverage criteria such as MC/DC. However, an objective criterion has proven elusive, and the search for one divisive, partly because of the diversity in the kinds of inter-component interface we see in modern airborne systems. Components can interact in many ways, via the OS and hardware, and computations can be sequential, scheduled (multithreaded) or concurrent; these factors can lead to different types of coupling being relevant.

The second school of thought accepts that there is no one-size-fits-all metric for DCCC. Instead, it considers DCCC a higher-level framework through which interface characteristics can be analysed and common problems eliminated. Overlap with other DO-178C objectives is acknowledged, and there is an explicit recognition that DCCC is not just about tests and code coverage, but also involves other artefacts such as the architecture and its relationship to the code. DCCC analysis within this framework may involve manual reviews and potentially different forms of automation. The approach is typified by the “Rierson list”, a list of high-level checks based on common sources of potential problems, which can form the basis of DCCC analysis (Rierson 2013).

The two schools of thought represent extremes in a spectrum of opinion. In practice, DO-178C projects often employ an approach drawing on aspects of both, some with more emphasis on the first school of thought, and some with more on the second.

| | DCCC as a Structural Coverage Criterion | DCCC as a Higher-level Framework |
|---|---|---|
| Aim | Demonstrate sufficiency of testing according to quantifiable metrics | Demonstrate sufficiency of testing by considering known sources of defects during software integration |
| Applicability | Metrics only available for specific interface types | Framework is applicable generally, although it requires instantiation for specific interfaces |
| Basis | Source code | Architecture, source code, data dictionaries, low-level requirements, platform characteristics |
| Primary evidence | Traceability from tests to coverage goals | Traceability from tests to coverage goals; evidence from manual review and other analyses |
| Coverage criteria | Automatic derivation of coverage goals based on pre-defined criteria | Manual; process-based |
| Completion criteria | 100% of coverage goals observed or justified | Process completion criteria achieved |
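To make the completion criterion in the structural coverage column concrete, here is a minimal sketch of how "observed or justified" might be checked. The goal names, the justification text and the `coverage_summary` helper are all hypothetical and only illustrate the idea; they do not describe any particular tool.

```python
def coverage_summary(goals, observed, justified):
    """Return (percent closed, outstanding goals).

    A goal counts as closed if it was observed in testing or has a
    recorded justification. All names here are illustrative assumptions.
    """
    goals = set(goals)
    closed = goals & (set(observed) | set(justified))
    percent = 100.0 * len(closed) / len(goals) if goals else 100.0
    return percent, goals - closed


# Hypothetical coverage goals, named "<from>-><to>:<what is coupled>".
goals = {
    "nav->display:call",
    "sensor_io->nav:altitude",
    "nav->autopilot:heading",
    "autopilot->nav:mode",
}
observed = {"nav->display:call", "sensor_io->nav:altitude"}
# Justifications map a goal to the rationale for not observing it.
justified = {"autopilot->nav:mode": "mode is fixed in this configuration"}

percent, outstanding = coverage_summary(goals, observed, justified)
print(f"{percent:.0f}% closed, outstanding: {sorted(outstanding)}")
```

In this sketch the analysis is only complete when `outstanding` is empty, which corresponds to 100% of the goals being observed or justified.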

Defining coverage criteria

What is central to any interpretation of DCCC is that there should be a criterion against which the completeness of testing can be justified. Views differ on how that criterion is defined, and what project-specific inputs are required to define it, but there is no disagreement about whether it should exist or not.

Criteria can be defined in terms of couplings, where we define a coupling as being an interaction between components that needs to be observed in testing and traced to the tests that exhibit the interaction. For example, a coupling might be defined as a possible call over the interface between two components, or as a write to a variable on one side of the interface followed by a corresponding read from the same variable on the other side of the interface.
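As a rough illustration of the second example, the sketch below scans an execution trace for a write in one component followed by a read of the same variable in a different component, and records which tests exhibited each coupling. The trace format, component names and the `observed_data_couplings` function are assumptions made for this example, not a description of how any specific tool works.

```python
from collections import defaultdict


def observed_data_couplings(trace):
    """Map (writer, reader, variable) to the set of tests that exhibited it.

    trace is a list of (test, component, operation, variable) tuples in
    execution order; this format is a hypothetical one for illustration.
    """
    last_writer = {}  # (test, variable) -> component that last wrote it
    couplings = defaultdict(set)
    for test, component, operation, variable in trace:
        if operation == "write":
            last_writer[(test, variable)] = component
        elif operation == "read":
            writer = last_writer.get((test, variable))
            # Only a read on the other side of the interface is a coupling.
            if writer is not None and writer != component:
                couplings[(writer, component, variable)].add(test)
    return dict(couplings)


trace = [
    ("test_nav_01", "sensor_io", "write", "altitude"),
    ("test_nav_01", "nav", "read", "altitude"),
    ("test_nav_02", "nav", "write", "heading"),
    ("test_nav_02", "nav", "read", "heading"),  # same component: ignored
    ("test_nav_02", "sensor_io", "write", "altitude"),
    ("test_nav_02", "display", "read", "altitude"),
]
couplings = observed_data_couplings(trace)
for key, tests in sorted(couplings.items()):
    print(key, sorted(tests))
```

Keeping the test name alongside each coupling gives the traceability from tests to observed interactions that the definition above calls for.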

Note that we prefer the term “coupling” to “couple”, as in the mechanism by which a locomotive is attached to its rolling stock. “Couple” can be confused with “pair”, which perhaps overly restricts the way we think about component interactions. We will explore couplings in more detail in a future blog post.

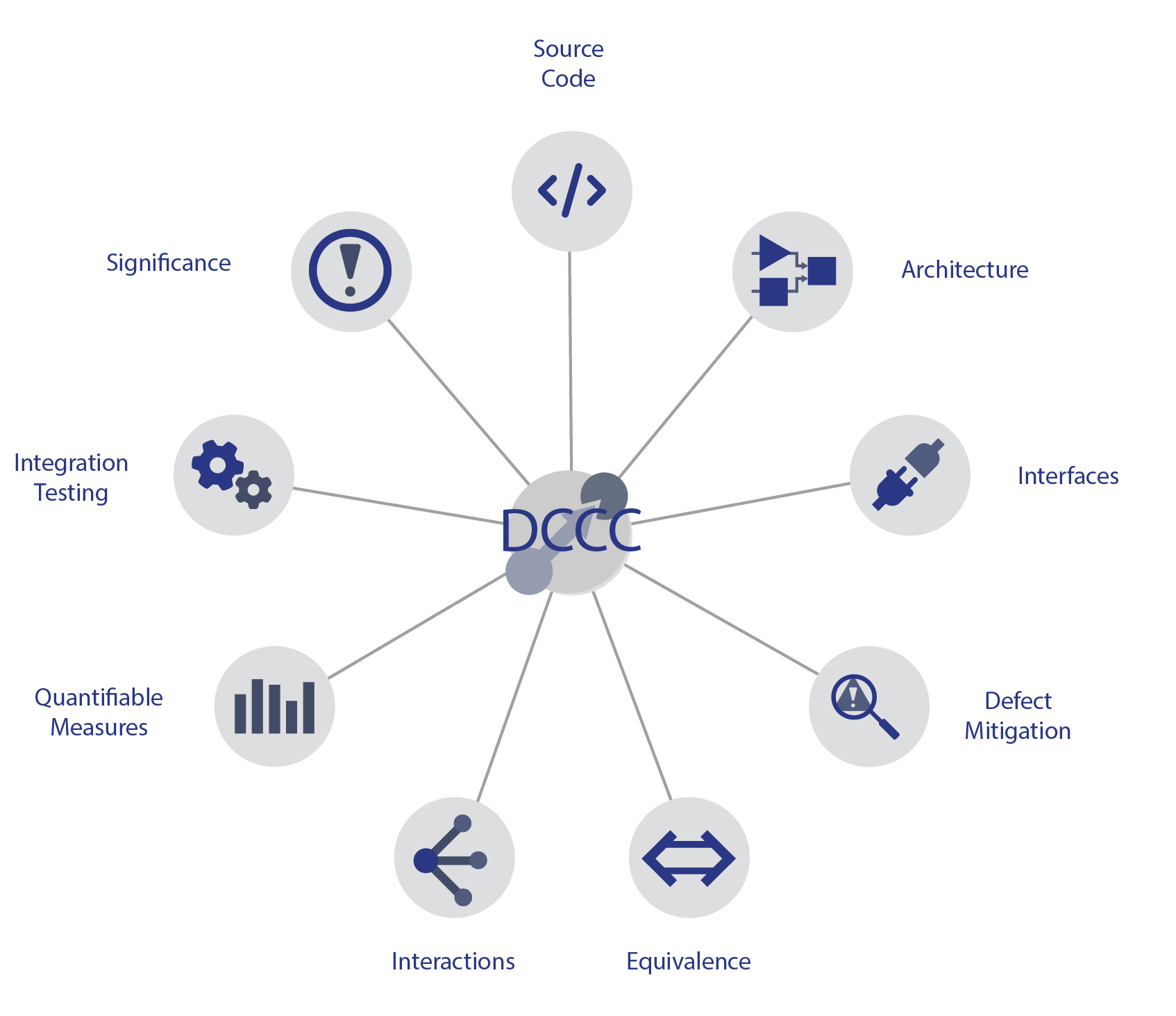

Couplings represent the significant interactions between components, all of which need to have been covered during integration testing. Defining “significant” is challenging, but it is critical to performing effective DCCC analysis. Significance can be driven and affected by a number of factors, including how control is switched or shared over an interface, the roles the application, OS and hardware play within the interface, and the kinds of defect commonly encountered. Significance is heavily influenced by architectural considerations as well as code, and may even draw on outputs from safety-related activities.

Understanding what is significant can involve answering questions for which there is no straightforward answer. The basis for these questions roughly involves two kinds of consideration:

- How is the interface intended to operate?

Which rules govern the correct usage of the inter-component interfaces in your software? What does one side of the interface rely on and what, in turn, does the other side guarantee? (These are sometimes referred to as interface requirements.)

What must you observe during testing to have confidence that these rules are respected?

Examples of things you’ll need to consider include range and relationship constraints on shared and passed data, as well as call sequencing constraints.

- Which behaviors are representative?

Which behaviors must you observe to be confident that a fully representative set of interactions has been exercised during testing, and therefore that your testing is complete?

How can you justify that each class of behavior you want to observe during testing is equivalent based on your notion of significance?

Some considerations for this include patterns and locality of reads and writes, parameter handling, treatment of compound objects, depth of exploration of interface, equivalence classes (on parameters or return values), and interrupt patterns.
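Both kinds of consideration can be sketched together for a single, entirely hypothetical interface in which one component may call `set_target(altitude_ft)` on another only after `init()`. The range limits, the equivalence classes and the `check_calls` helper below are assumptions chosen for illustration; in a real project they would come from the interface requirements and your own notion of significance.

```python
# Assumed interface rule: altitude_ft must lie within this range.
ALTITUDE_RANGE = (0, 45000)

# Assumed equivalence classes on the altitude_ft parameter.
CLASSES = {
    "low": lambda a: 0 <= a < 10000,
    "cruise": lambda a: 10000 <= a < 40000,
    "high": lambda a: 40000 <= a <= 45000,
}


def check_calls(calls):
    """Check observed calls against the interface rules.

    calls is a list of (function, argument) pairs in observed order.
    Returns (violations, equivalence classes exercised).
    """
    violations, seen, initialised = [], set(), False
    low, high = ALTITUDE_RANGE
    for index, (function, argument) in enumerate(calls):
        if function == "init":
            initialised = True
        elif function == "set_target":
            if not initialised:  # call sequencing constraint
                violations.append((index, "set_target before init"))
            if not low <= argument <= high:  # range constraint
                violations.append((index, "altitude out of range"))
            seen.update(name for name, member in CLASSES.items()
                        if member(argument))
    return violations, seen


calls = [
    ("set_target", 5000),
    ("init", None),
    ("set_target", 20000),
    ("set_target", 50000),
]
violations, seen = check_calls(calls)
missing = set(CLASSES) - seen
print("violations:", violations)
print("classes not yet exercised:", sorted(missing))
```

The violations address the first question (are the interface rules respected?), while the classes not yet exercised address the second (has a representative set of behaviors been observed?).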

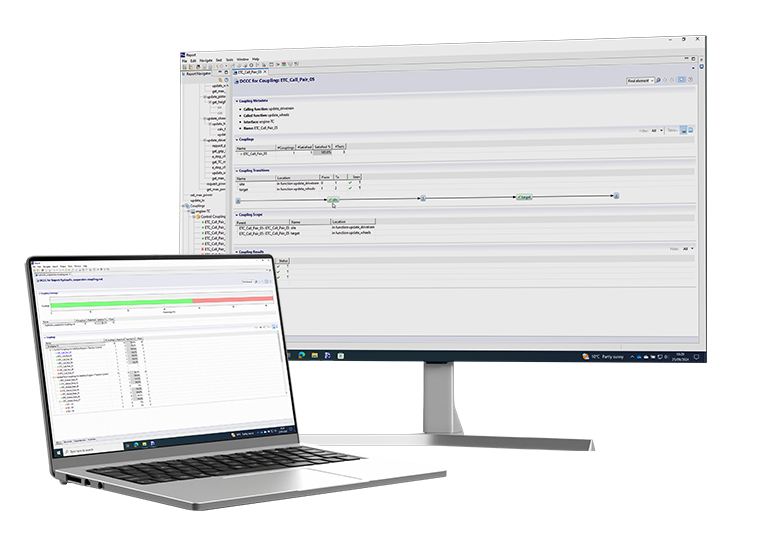

An innovative new approach for DCCC Analysis

- Data coupling and control coupling analysis for DO-178C

- Configurable definition of components, interfaces and couplings

- Process guidance to help define couplings for your project

In our next blog, we’ll look at the importance of components in DCCC – what they are, how they are derived, and what impact they have on DCCC coverage analysis.

Want to learn more about DCCC? Check out our full blog series or download our DCCC Solutions for DO-178C Product brief.

References

Rierson, L. (2013). Developing Safety-Critical Software: A Practical Guide for Aviation Software and DO-178C Compliance. CRC Press.
