"Data coupling" and "control coupling" (collectively “DCCC”) refer to the way software components interact with each other in an integrated system to perform a higher-level function. Understanding software DCCC and analyzing DCCC coverage during integration testing can help reduce development costs and mitigate risks, and DCCC analysis is required for DO-178C certification of critical avionics. Rapita Systems is developing RapiCoupling, an automation solution designed to meet the complexities of DCCC analysis for modern DO-178C software.

In previous posts we have looked at different general interpretations of what DCCC means and introduced the concept of a software component and what it means for DCCC. In this post, we’ll take a closer look at control coupling by considering some basic examples.

Control coupling is defined in DO-178C as follows:

“Control Coupling is the manner, or degree, by which one software component influences the execution of another software component.”

But what does this actually mean?

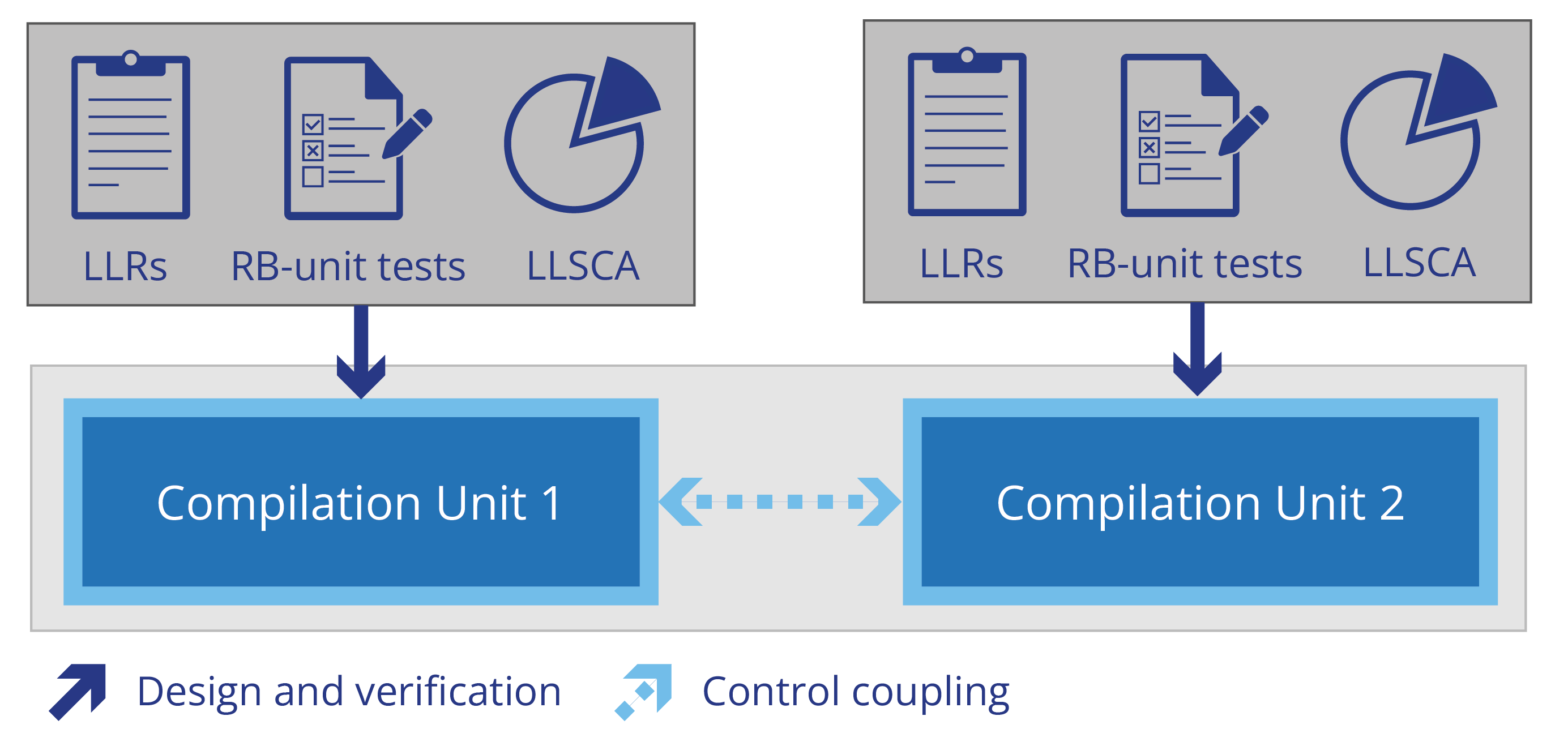

The ways one software component can influence the execution of another depend on the way the software components are integrated. The simplest way software components are integrated is via a single-threaded execution model, where each software component’s functions can call functions in other components (including pre-compiled libraries) which execute and return control back to the calling component. For single-threaded code, it is common for the components in the software architecture to correspond to compilation units in the build system, which are verified by requirements-based unit tests and assembled via a linker (Figure 1).

Software components can be integrated in more complex ways, for example interrupt processing, multi-tasking and multi-threading. We’ll take a closer look at some of these execution models in subsequent posts.

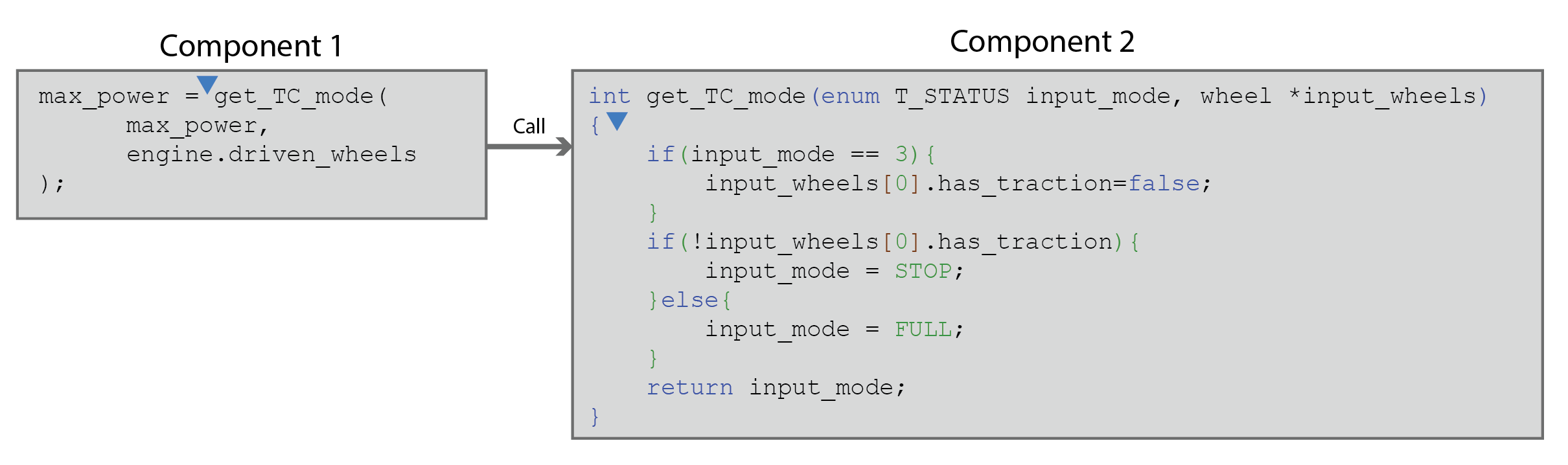

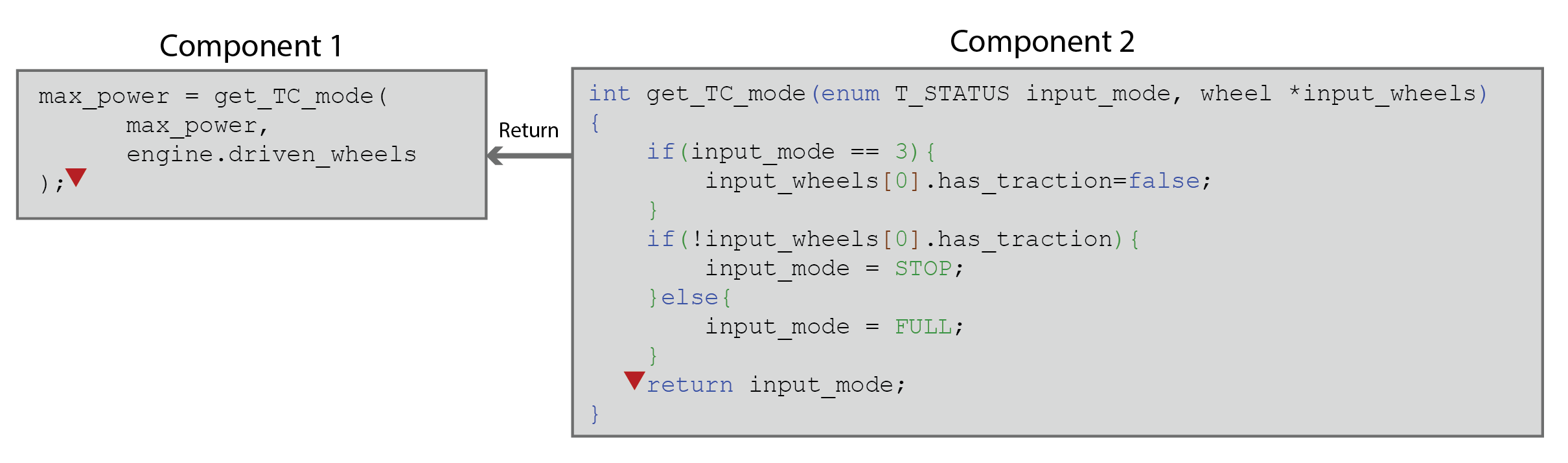

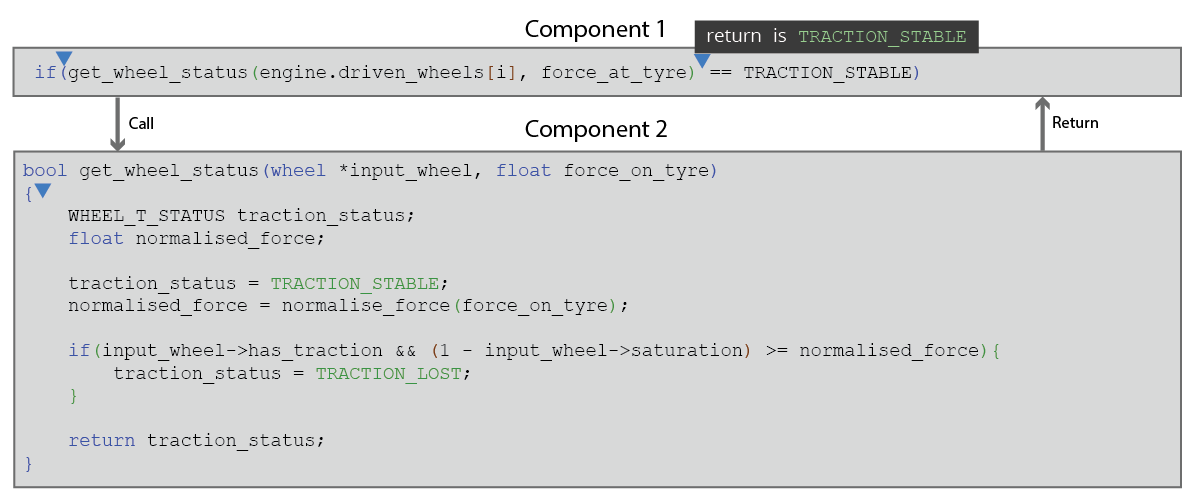

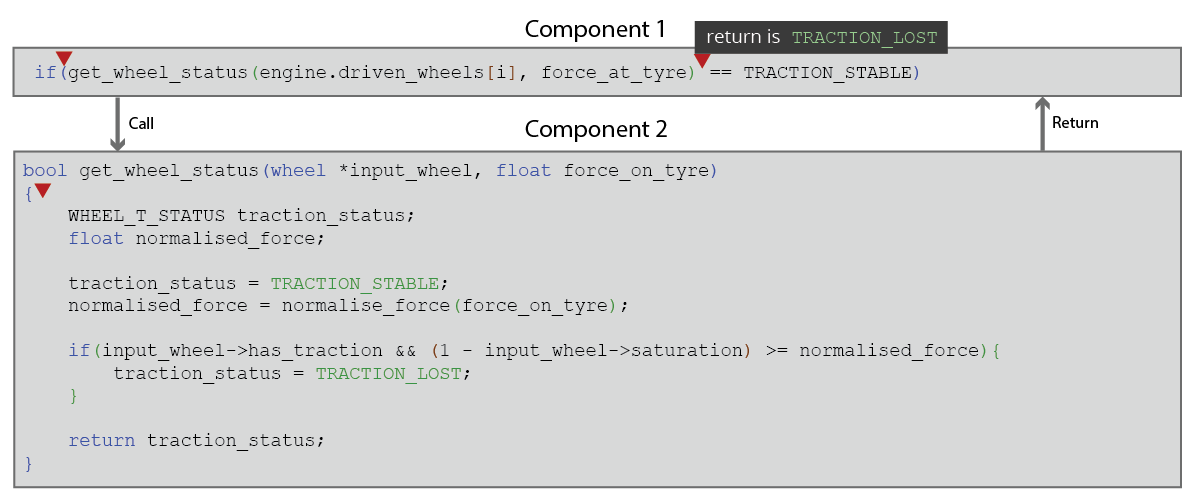

Control coupling analysis is one way of demonstrating that integration testing is sufficiently comprehensive. For those who mainly consider control coupling to be a coverage criterion, the minimum analysis requirement for software components integrated by a call-return model is generally accepted to be that every transfer of control (both calls and returns) over a component boundary has been observed in at least one test (Figures 2a and 2b). Note: triangle markers in the figures show important parts of program execution flow that should be observed during testing.
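
As a rough illustration of this minimum criterion, the sketch below places two hand-written probes around a single cross-component call. The component names (`nav`, `sensor`), the functions and the flag variables are all hypothetical, and a real project would normally rely on tool-inserted instrumentation rather than manual flags.

```c
#include <stdbool.h>

/* Hypothetical coverage flags for one cross-component interface. */
static bool seen_call_nav_to_sensor;   /* control passed from nav into sensor */
static bool seen_return_sensor_to_nav; /* control came back from sensor to nav */

/* Component "sensor" (callee). The reading is a placeholder value. */
static int sensor_read(void)
{
    return 42;
}

/* Component "nav" (caller). */
int nav_update(void)
{
    int raw;

    seen_call_nav_to_sensor = true;    /* probe just before the boundary call */
    raw = sensor_read();
    seen_return_sensor_to_nav = true;  /* probe just after the return */

    return raw * 2;
}

/* True once both the call and the return have been observed in testing. */
bool nav_sensor_coupling_covered(void)
{
    return seen_call_nav_to_sensor && seen_return_sensor_to_nav;
}
```

An integration test that calls `nav_update()` at least once would mark this coupling as covered. A unit test that stubs out `sensor_read()` would not exercise the real boundary at all.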

Complications to this coverage definition include indirection, where a function call is made via a pointer or another context-dependent mechanism such as dynamic dispatch. For indirect calls, the transfer of control to every potential target from every call site must be observed. Additional complexity can be introduced by language-specific non-standard control flow, such as exception handling or constructs such as setjmp/longjmp.
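
To make the indirect-call case concrete, here is a minimal sketch that tracks which (call site, target) pairs have been observed. The handler functions, the two call sites and the `observed` matrix are assumptions made for illustration. The sketch also assumes the `target` index is always valid.

```c
#include <stdbool.h>

#define NUM_SITES   2
#define NUM_TARGETS 2

typedef int (*handler_fn)(int);

/* Two hypothetical targets reachable through the function pointer table. */
static int handler_double(int x) { return x * 2; }
static int handler_negate(int x) { return -x; }

static const handler_fn targets[NUM_TARGETS] = { handler_double, handler_negate };

/* observed[site][target] is set when that site transfers control to that target. */
static bool observed[NUM_SITES][NUM_TARGETS];

/* Records the pair, then makes the indirect call (target assumed in range). */
static int call_indirect(int site, int target, int arg)
{
    observed[site][target] = true;
    return targets[target](arg);
}

/* Two distinct call sites in the calling component. */
int site0_process(int target, int arg) { return call_indirect(0, target, arg); }
int site1_process(int target, int arg) { return call_indirect(1, target, arg); }

/* Number of (site, target) pairs that no test has exercised yet. */
int uncovered_pairs(void)
{
    int missing = 0;
    for (int s = 0; s < NUM_SITES; s++) {
        for (int t = 0; t < NUM_TARGETS; t++) {
            if (!observed[s][t]) {
                missing++;
            }
        }
    }
    return missing;
}
```

With two sites and two targets there are four pairs to observe. Reaching every target from one site alone would still leave two pairs uncovered.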

To provide greater assurance, you can extend the definition of coverage, raising the bar on the behaviors that must be observed in testing. One way to do this is to think about the interface as more than just the transfer of control and also consider the important code behaviors immediately following on from the call or return, or even immediately before it. After a transfer of control, for example, a target function may have different behaviors (defined by their own low-level requirements) depending on the data supplied over the interface. The scope of what you consider to be part of the interface is sometimes referred to as depth of interface in the literature.

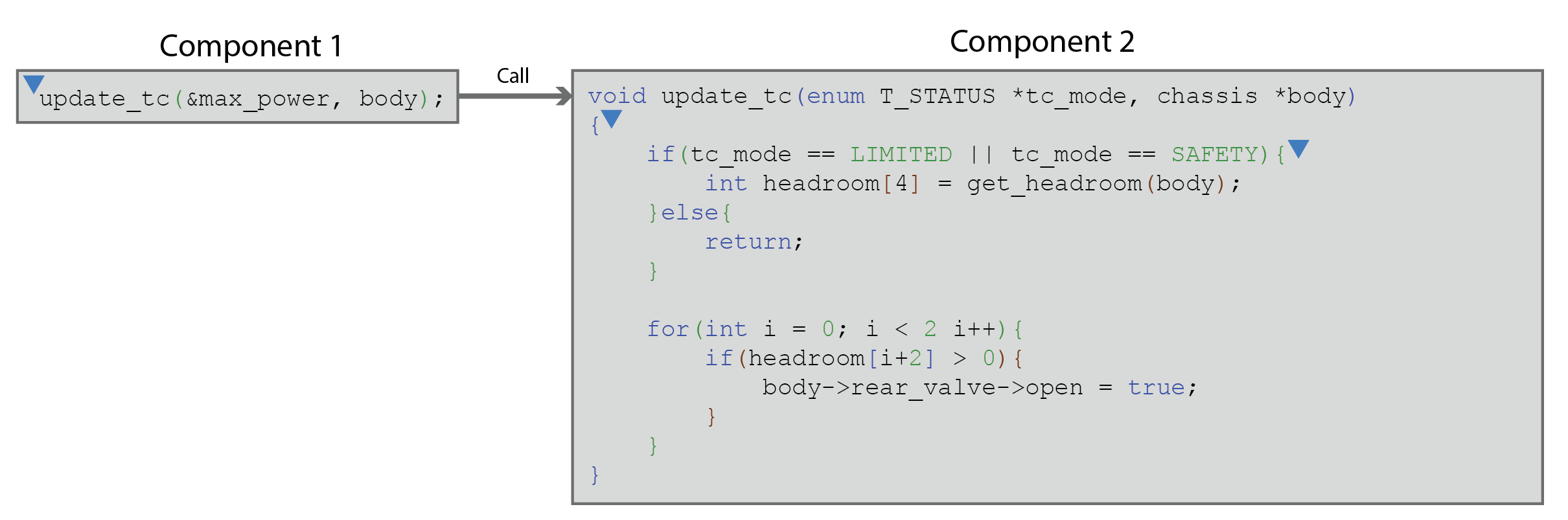

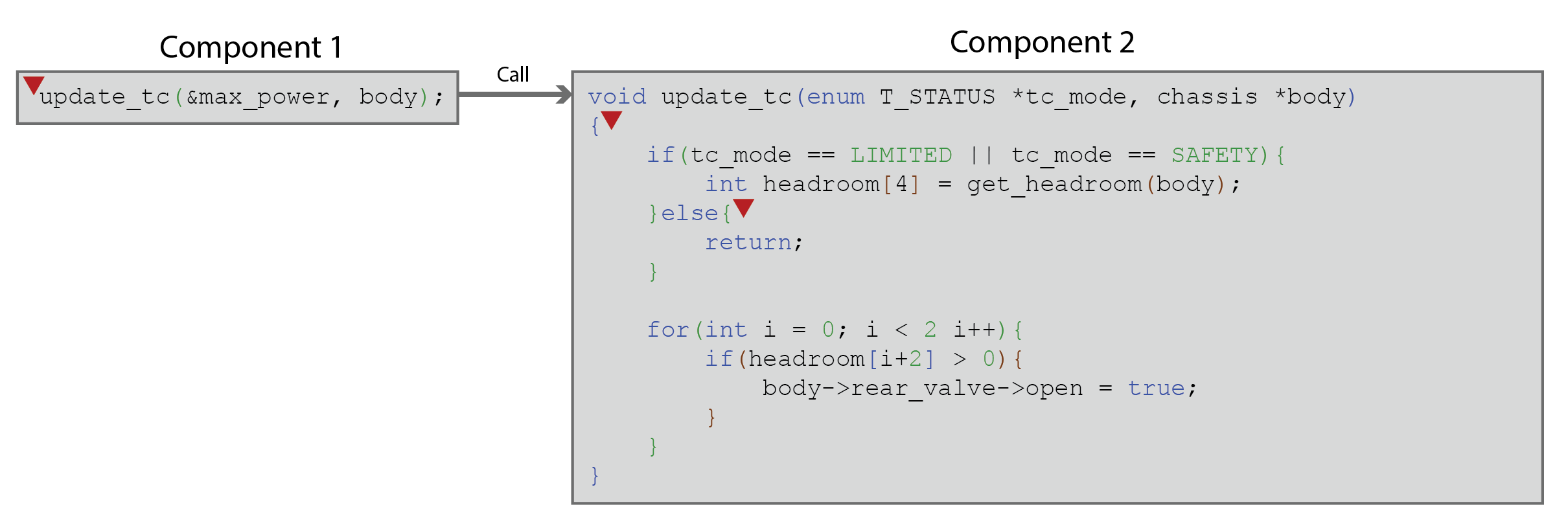

One way to incorporate depth of interface into DCCC analysis is to think about how data affects control flow within the interface itself, and observe the branches associated with that control flow being exercised. This is sometimes referred to as c-uses, partial decision coverage or partial branch coverage, and it is normally associated with the modes the interface operates in, as defined by enums, flag data items, or equivalence class boundaries – for example, how dispatch logic works. An example of this is shown in Figures 3a and 3b.
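
The following sketch shows one possible reading of this idea for a mode-driven interface. The enum, the `actuator_command` function and the per-mode flags are hypothetical. They are meant only to show why a single call across the boundary can satisfy basic call-return coverage while leaving interface modes unobserved.

```c
#include <stdbool.h>

/* Hypothetical operating modes supplied by the caller over the interface. */
typedef enum { MODE_STANDBY, MODE_ACTIVE, MODE_TEST, MODE_COUNT } ctrl_mode_t;

/* One flag per mode branch inside the interface function. */
static bool mode_branch_seen[MODE_COUNT];

/* Interface function of the callee: its behavior depends on the mode argument. */
int actuator_command(ctrl_mode_t mode, int demand)
{
    switch (mode) {
    case MODE_STANDBY:
        mode_branch_seen[MODE_STANDBY] = true;
        return 0;            /* ignore the demand while in standby */
    case MODE_ACTIVE:
        mode_branch_seen[MODE_ACTIVE] = true;
        return demand;       /* pass the demand through */
    case MODE_TEST:
        mode_branch_seen[MODE_TEST] = true;
        return demand / 2;   /* reduced authority in test mode */
    default:
        return -1;           /* invalid mode is rejected */
    }
}

/* Number of mode branches that testing has not yet exercised. */
int modes_unobserved(void)
{
    int missing = 0;
    for (int m = 0; m < MODE_COUNT; m++) {
        if (!mode_branch_seen[m]) {
            missing++;
        }
    }
    return missing;
}
```

A test suite that only ever calls `actuator_command(MODE_ACTIVE, ...)` observes the call and the return, but `modes_unobserved()` would still report two outstanding branches.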

Another way to provide greater assurance is to require observation of all of the possible return values from a function, or the different variable settings at the point of return. As these may heavily influence control flow downstream of the call, potentially resulting in very different behaviors, they can be regarded as part of the interface and therefore an important consideration in control coupling. An example of this might be distinguishing return values indicating success from those indicating unsuccessful results or failures and seeking to observe both in testing (Figures 4a and 4b).
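
A minimal sketch of return-value observation might look like the following, assuming a hypothetical `store` component with a two-valued status code and a `logger` component that calls it. The names and the status enum are illustrative and not taken from any particular tool or codebase.

```c
#include <stdbool.h>

#define STORE_SIZE 4

/* Hypothetical status codes returned over the interface. */
typedef enum { ST_OK, ST_ERR_RANGE, ST_STATUS_COUNT } status_t;

static int  store[STORE_SIZE];
static bool status_seen[ST_STATUS_COUNT]; /* which return values the caller has received */

/* Component "store" (callee): rejects out-of-range indices. */
status_t store_set(int index, int value)
{
    if (index < 0 || index >= STORE_SIZE) {
        return ST_ERR_RANGE;
    }
    store[index] = value;
    return ST_OK;
}

/* Component "logger" (caller): its downstream behavior depends on the status. */
int logger_record(int index, int value)
{
    status_t st = store_set(index, value);
    status_seen[st] = true;          /* probe at the point of return */
    return (st == ST_OK) ? 0 : -1;   /* failure path differs from success path */
}

/* True once every possible status has been observed at the caller. */
bool all_statuses_observed(void)
{
    for (int s = 0; s < ST_STATUS_COUNT; s++) {
        if (!status_seen[s]) {
            return false;
        }
    }
    return true;
}
```

Under this criterion, testing would need at least one successful call and one out-of-range call before the interface counts as covered.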

Whether, and how, you use these higher-bar coverage criteria ultimately depends on what evidence you want to provide to meet the DCCC objective, or satisfy yourself that your integration testing is complete.

The most general interpretation of control coupling is that it is a means of providing evidence of the absence of defects associated with control flow. Common defects for call-return type interfaces might include, for example:

- Getting the order of execution wrong

- Executing conditional code in the wrong circumstances

For control coupling purposes, we are mainly concerned with how components are integrated over an interface, and potential defects can be interpreted contractually: when one component relies on something from another component, is the other component always guaranteed to respect it?

In this context, control coupling analysis amounts to providing sufficient evidence that control coupling contracts are respected. This may involve manual review and other forms of static analysis, but the bulk of the evidence is usually supplied via integration testing. For the types of defect mentioned above, for example, we might ask:

- Have we captured the ordering requirements the calling component needs to respect on the interface, and does testing provide sufficient evidence that those ordering requirements are met?

- Have we captured the conditions under which functions expect to be called (their preconditions), and does testing provide sufficient evidence that those conditions are respected?
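
One way to gather evidence for contracts like these during integration testing is a small runtime monitor on the interface. The sketch below assumes a hypothetical `bus` component with one ordering rule (`bus_init()` must come first) and one precondition (a bounded message length). The names, the limit and the violation counters are all illustrative.

```c
#include <stdbool.h>

#define BUS_MAX_LEN 8   /* assumed upper bound on message length */

static bool bus_initialised;
static int  ordering_violations;      /* bus_send() called before bus_init() */
static int  precondition_violations;  /* bus_send() called with a bad length */

void bus_init(void)
{
    bus_initialised = true;
}

/* Contract: bus_init() has been called, and 1 <= len <= BUS_MAX_LEN.
 * Returns len on success, or -1 if the caller broke the contract. */
int bus_send(int len)
{
    if (!bus_initialised) {
        ordering_violations++;
        return -1;
    }
    if (len < 1 || len > BUS_MAX_LEN) {
        precondition_violations++;
        return -1;
    }
    return len;
}

/* Accessors that a test harness could check at the end of a run. */
int bus_ordering_violations(void)     { return ordering_violations; }
int bus_precondition_violations(void) { return precondition_violations; }
```

If both counters are still zero after the integration test suite has run, that is some evidence the callers respect the contract. Whether it is sufficient evidence depends on how thoroughly the tests exercise the calling components.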

One of the practical complexities associated with this sort of analysis is arguing what constitutes sufficient evidence. For example, is a single confirmatory test that the call sequencing is respected sufficient? If not, how do we know we have considered all of the significant cases? Answering this question convincingly is the key to a strong DCCC argument, whether you are applying coverage criteria or focusing on defect types.

In conclusion, control coupling analysis might seem like a straightforward topic at first glance: simply ensure you have tests that exercise all of the calls over component boundaries. However, once you consider the significant control interactions provided by the interface, and interface types more complex than call-return interfaces, many questions arise that have no clear answer. It is ultimately up to each certification applicant to determine (and agree with their Designated Engineering Representative, or DER) how they are going to approach control coupling and what coverage metrics they provide as evidence.

In the next blog in this series, we’ll look at data coupling in more detail, again focusing on basic call-return interfaces.

Want to learn more about DCCC? Check out our full blog series or download our DCCC Solutions for DO-178C Product brief.

